Samsung embeds AI into high-bandwidth memory to beat up on DRAM

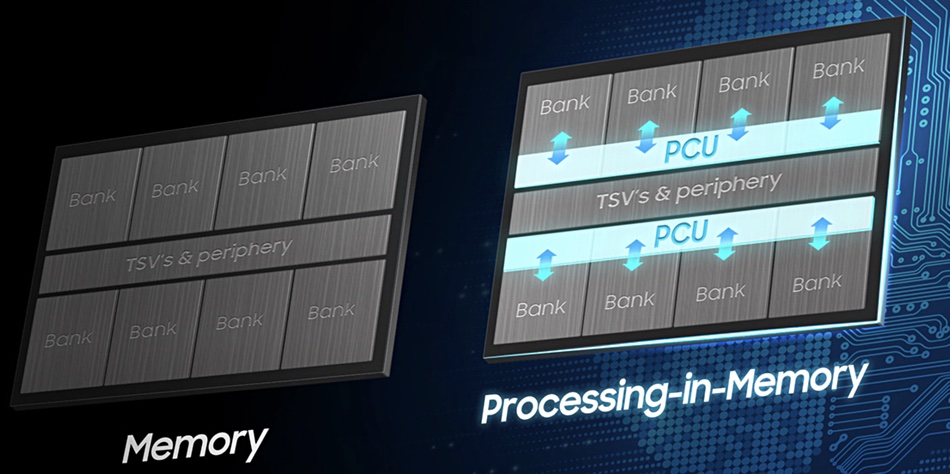

Samsung has announced a high-bandwidth memory (HBM) chip with embedded AI that is designed to accelerate compute performance for high-performance computing and large data centres. The AI technology is called PIM – short for ‘processing-in-memory’. Samsung’s HBM-PIM design delivers faster AI data processing, as data does not have to move to the main […]

[Tech Day 2022] DRAM Solutions to Advance Data Intelligence

Samsung announces first successful HBM-PIM integration with Xilinx Alveo AI accelerator

BALD Engineering - Born in Finland, Born to ALD: memory

Samsung Brings In-Memory Processing Power to Wider Range of Applications

Samsung and SK Hynix rumored to boost AI accelerator performance with the advent of the HBM4 DRAM standard

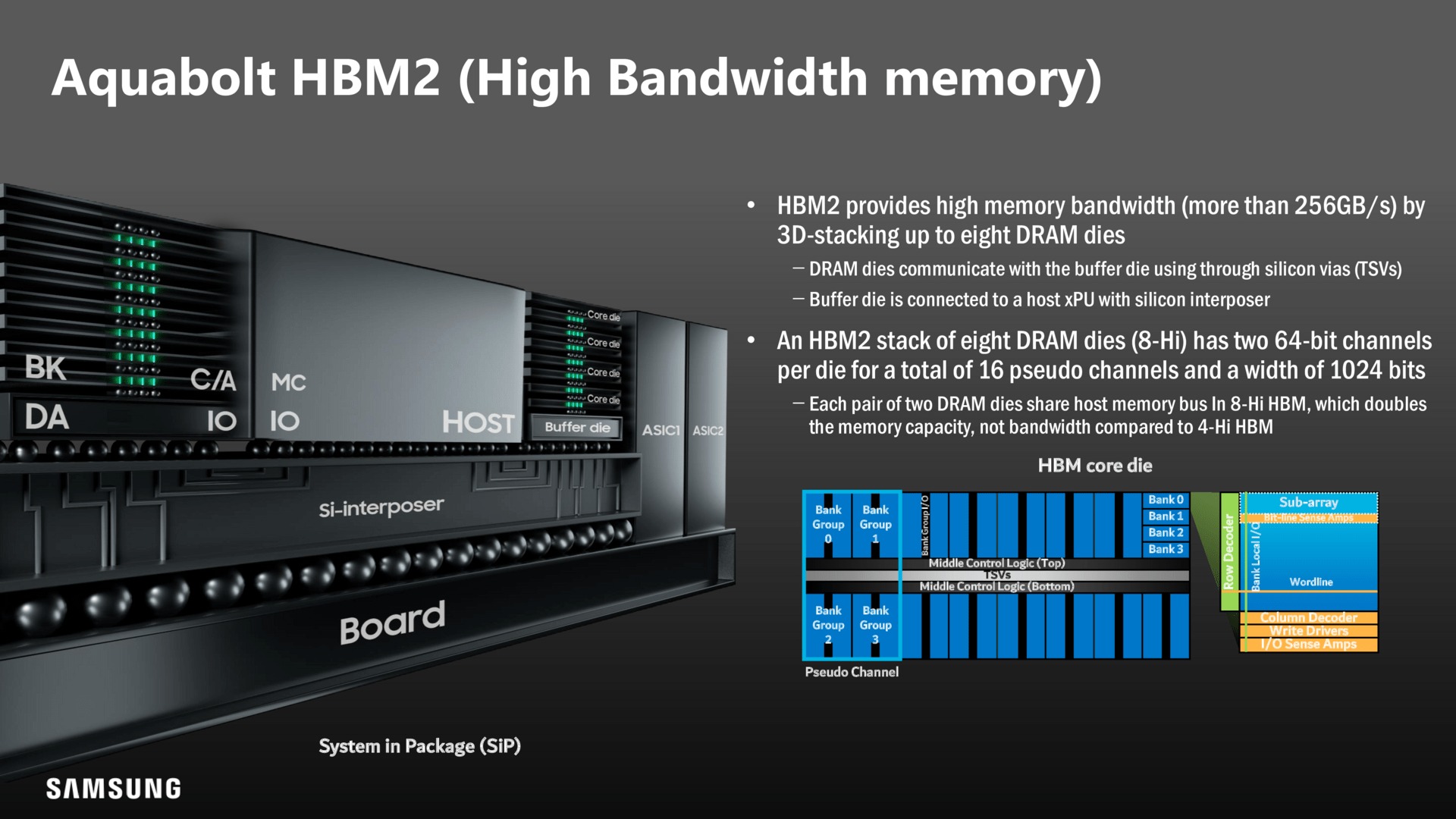

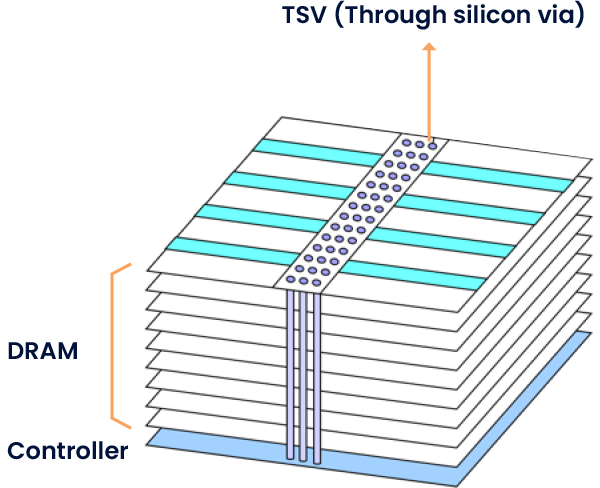

HBM Takes On A Much Bigger Role

Next-Gen Memory Ramping Up

Investors Bet Samsung's Smaller Memory Chip Rival SK Hynix Will Be an AI Winner

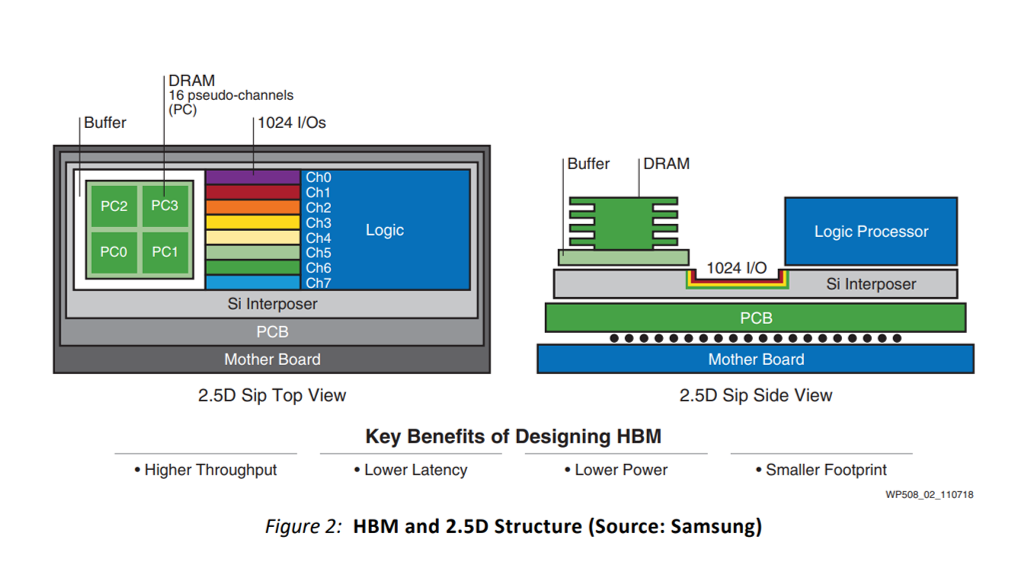

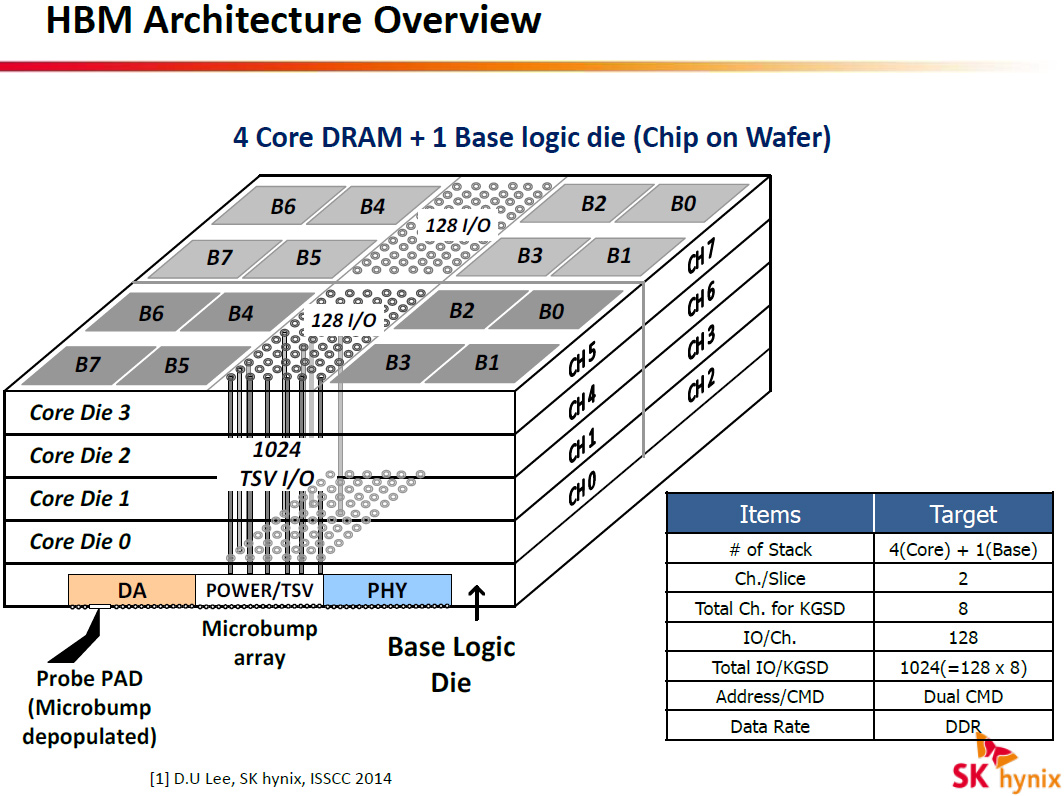

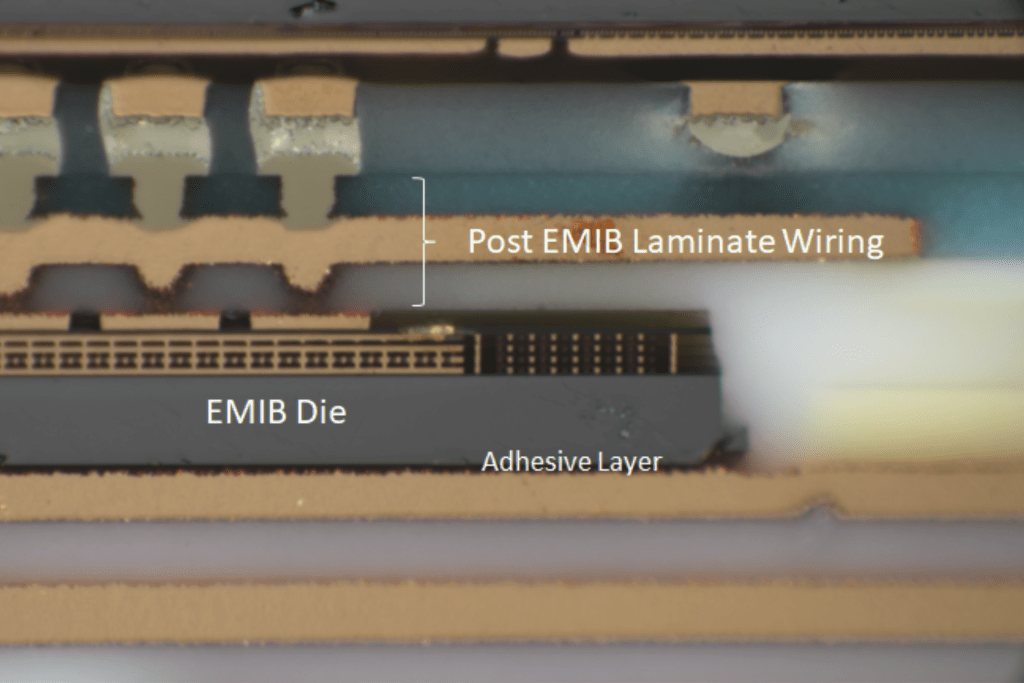

Advanced Packaging Part 2 - Review Of Options/Use From Intel, TSMC, Samsung, AMD, ASE, Sony, Micron, SKHynix, YMTC, Tesla, and Nvidia

What makes HBM2 RAM so 'expensive'? - Quora

Samsung Stuffs 1.2TFLOP AI Processor Into HBM2 to Boost Efficiency, Speed

How designers are taking on AI's memory bottleneck